Apache SQOOP (SQL-to-Hadoop) is a tool designed to import bulk Data into HDFS from Structured data stores such as Relational databases [RDBMS], enterprise data warehouses, and NoSQL systems.

In beginning of Ingestion Phase, We pull the structured data from RDBMS (Source layer) to HDFS in Hadoop with the help of Data Ingestion Tools (given with example Companies) such as:

a) SQOOP (Bank of America)

b) SPARK (TCS)

c) TALEND (Bank of England)

d) KAFKA (Cap Gemini)

e) Rest API (HCL)

f) SOAP Services

In other words, importing the Data from RDBMS to HDFS in Hadoop system

is called "SQOOP Import Process".

Data in SQOOP will be saved in the form of "Part File".

This is how Sqoop got its name -“SQL to Hadoop & Hadoop to SQL”. Sqoop does not perform any aggregations it just imports and exports the data.

Structured Data in RDBMS can be processed with the help of language

called ‘Structured Query Language’

[SQL].

Applications of RDBMS server:

a) a) Education [Universities]

a) b) Retail [Amazon, Flipkart, Walmart, etc.]

a) c) Automobile [BMW, Audi, etc.]

a) d) Insurance

RDBMS has advantage over DBMS in removing Duplication of Data and

Normalization.

We store the data only in RDBMS because of ACID Properties which is

DML (Insert, Update & Delete) operation.

Different Kinds of RDBMS

server:

a) Oracle RDB

a) b) MySQL

c) c) Postgres

a) d) Teradata

a) e) Netezza

a) f) DB2

a) g) Informix

JDBC (Java Data Base

Connector) is a connector that connects SQOOP to RDBMS Server.

Sqoop is rarely

using now-a-days for data import.

Steps in SQOOP Import Architecture:

a) Create

Record Container Class

Internally, RDBMS is built on SQL while HDFS is built on

JAVA.

We know that SQL is a Query Language while JAVA is a Programming Language.

In RBBMS, ID à

Integer & firstname à

varchar; while in

HDFS, ID à Integer & firstname à string

b) Boundary

Query Creation

Help to run multiple no. of Records (say 1 lac) with the help of Mappers (say 4).

c) Data Import [with available 4 Mappers]

Out of 3, Data Import step will take much time.

Based on the no. of Mappers, SQOOP make same no. of Connectors with

RDBMS to import data.

Default File format of Sqoop Import is textfile through which stored the data in HDFS.

SQOOP Import requires following:

b) Username

c) Password

d) Table Name

e) Table Directory

Let's Workouts with example:

1) 1) Use

retail_db and create tables:

1) 2) Create

and Select the test database:

create database test;

use

test;

1) 3) CREATE

TABLE user_data(userid

INT,firstname VARCHAR(20),lastname VARCHAR(20),city varchar(50),age int,createdt

date,transactamt int);

insert into user_data values(1,'siva','Raj','chennai',33,'2015-09-20',100000);

insert into user_data

values(2,'Prasad','babu','chennai',33,'2015-09-21',10000);

insert into user_data

values(3,'harish','kumar','banglore',39,'2015 -09-23',90000);

insert into user_data values(4,'azar','imran','hyderabad',33,'2015-09-24',1000);

insert into user_data values(5,'Kiran','kumar','chennai',23,'2015-09-20',200000);

1) 4) Select * from

user_data; [table name]

Above data will be store in RDBMS.

Host Id will be given by Client.

Check with HDFS:

Cloudera is a single node cluster while Cloudxlab is a 5 node cluster.

1) 5) Import

Table from SQL to HDFS:

A)a) sqoop import --connect jdbc:mysql://localhost/test --username root --password cloudera --table user_data -m 1; hadoop fs -rm -r /user/cloudera/user_data

Map Reduce will run:

b) sqoop import --connect

jdbc:mysql://localhost/test --username root --password cloudera --table user_data -m 1; --target-dir /user/cloudera/second_imp

Data will be stored in the HDFS in the form of file system call “Part File”.

c) sqoop import --connect

jdbc:mysql://localhost/test --username root --password cloudera -table user_data -m 1 --direct; hadoop fs -rm

-r /user/cloudera/user_data

In entire, Sqoop only Mapper will run not Reducer.

Success Marker tells whether your job done successfully or not.

Success marker only run once.

f) Import with --split-by option

If you want to use more than 1 Mapper, then use - -Split-by along with custid (column) command.

If we create a table with Primary Key, then no need to mention ‘Split-by option’.

Primary Key in Table inside RDBMS split the data to HDFS even without

using the command ‘split-by’.

--direct is Sqoop import property.

g) Sqoop

Eval:

Using Sqoop, we can see the Data present in MySQL server from the Edge

Node using the command called ‘Sqoop

Eval’. We can insert and update data using command ‘sqoop eval’.

h) SQOOP

DATA: In Real Time, we have 2

types of Data:

A) A) Static Data [new record everyday]

A) B) Dynamic Data / Delta Data / Incremental Modified

Data which is CDC (Change Data Capture)

A) A) Static Data:

New Records data added every day is called ‘Static Data’ which cannot

be modified.

Static Data in Sqoop can be

modified using Incremental Append

which takes from ‘0’ value of cust / user id – 1.

Example:

Execute below insert in mysql:

Add new 7th Row:

First press “Ctrl C” to exit from mysql and give below command in

Cloudera:

sqoop import --connect jdbc:mysql://localhost/test --username root --password cloudera -table user_data -m 1 --target-dir incrimport --incremental append --check-column custid --last-value 6

Now again enter into mysql:

a) Check Column

b) Last Value

By giving “Last Value” number (user id / cust id), the particular row will get import into HDFS through Sqoop import. Finally, all the records got imported to Hadoop HDFS.

Use “Sqoop Job” in order to automate the Last Value (‘0’ only) as problem with the Incremental Append is that every time we need to change the Last Value.

i) Create Sqoop Job First:

sqoop job --create Class10_SqoopJb -- import --connect

jdbc:mysql://localhost/test --username root --password cloudera -table user_data -m 1 --target-dir incrimport

--incremental append --check-column custid --last-value 0

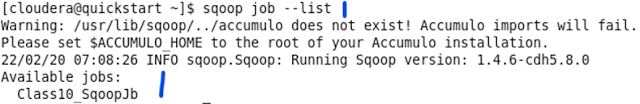

ii) Now List the Sqoop Job using - -list command:

[cloudera@quickstart ~]$ cd .sqoop

[cloudera@quickstart ~]$ echo -n "cloudera" >

sqp.password

To check last lines, give below command:

Give below

command, to read the password file only by you:

[cloudera@quickstart ~]$ chmod 400 sqp.password

See above, only we can read the password file. Finally move the

password file to HDFS using “put” command.

A) B) Dynamic Data:

Dynamic Data in

Sqoop can handle modified data (Delta Load) on day to day basis by

using command “- -incremental lastmodified’

where Reducer (instead of Mapper)

will run the process in HDFS during import.

Sequence File is the Binary

format where humans cannot read the data which used to store the Key Value

Pairs.

Command: - -as-avrodatafile

2 types of Data Standardization in AVRO:

a) a) AVRO SCHEMA (Human readable data)

a) b) AVRO DATA (in Binary format)

Parquet file format use by

Spark to store data is developed by Cloudera together with Twitter.

All our Outputs get store in Parquet file format which is also called

Columnar Storage where retrieval is very fast.

So far, we have learned Sqoop import of one (1) table only.

There is a command for Sqoop import

of all tables (say more than 1 table).

The Sqoop

import-all-tables are a tool for importing a set of tables from the

relational database to the Hadoop Distributed File System. On importing the set

of tables, the data from each table is stored in the separate directory in HDFS.

sqoop import-all-tables

To import Single Table – Target

Directory is in use.

To import Multiple Tables – Warehouse

Directory should be use.

j) SQOOP EXPORT is transferring data from HDFS to RDBMS using PYSPARK.

Sqoop Export has 3 steps:

a) a) Record

Container Class [HDFS (Java) file to RDBMS (SQL) Database]

a) b) Mapper Launch (1 mapper for every

Block)

1 GB à

1*1024/128 = 8 Blocks – 8 mappers to transfer the data.

c) Data Export will take place.

sqoop export --connect jdbc:mysql://localhost/test --username root

--password cloudera --table customer_data --export-dir imp_del;

Saving the data in RDBMS is called ‘Committing’ the Data.

Data from HDFS (part file) first export to Staging table then to Production table. This process is called ‘Transactionality’.

No comments:

Post a Comment