Shared variables are the second abstraction in Spark, after RDDs, that can be used in parallel operations. By default, when Spark runs a function in parallel as a set of tasks on different nodes, it ships a copy of each variable used in the function to each task. Sometimes, a variable needs to be shared across tasks, or between tasks and the driver program. Spark supports two types of shared variables: accumulators and broadcast variables.

a) Accumulators: An accumulator is a special kind of variable used to aggregate data across tasks, for example to count the error records in a particular dataset. No shuffling is involved.

The code that operates on an RDD is evaluated on the executors, where tasks can only add to an accumulator. The accumulated value is read on the driver.
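The error-record count described above can be sketched in PySpark as follows. This is a minimal sketch, not a definitive implementation: the names `is_error_record` and `count_error_records` and the `"ERROR"` marker are hypothetical, and `sc` is assumed to be an already running `SparkContext`.

```python
def is_error_record(line):
    # Hypothetical rule: a record is an error if it contains "ERROR".
    return "ERROR" in line


def count_error_records(sc, lines):
    """Count error records with an accumulator.

    `sc` is assumed to be an existing pyspark.SparkContext.
    """
    # Created on the driver with an initial value of 0.
    error_count = sc.accumulator(0)

    def track(line):
        # Runs on the executors: tasks may only add to the accumulator.
        if is_error_record(line):
            error_count.add(1)

    # foreach is an action, so the updates are applied when it runs.
    sc.parallelize(lines).foreach(track)

    # The accumulated value is read back on the driver.
    return error_count.value
```

With a context obtained from `SparkContext.getOrCreate()`, the function could be called as `count_error_records(sc, ["ok", "ERROR: bad row"])`. The count is gathered without a shuffle, because each task only sends its own partial update to the driver.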

b) Broadcast variables:

Spark Static Allocation:

1 Thread / Task can handle 40 MB to 64 MB of data.

1 CPU Core = 4 Threads

Each Executor can have a maximum of 5 Cores.

To handle 10 GB of Data → 80 Blocks → 160 Tasks → 40 CPU Cores → 8 Executors

1 Executor can handle 16 threads → 16 * 64 = 1024 MB → 1 GB + 500 MB (Overhead), so each executor requires 2 GB of RAM.
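The sizing arithmetic above can be checked with a short script. This is only a sketch of the rules of thumb stated in the text, not Spark defaults. The 128 MB block size is an assumption implied by 10 GB giving 80 blocks, and the function name `size_cluster` is hypothetical. The 16 threads per executor is taken from the text as-is; 5 cores at 4 threads each would give 20, which also rounds up to 2 GB.

```python
import math

# Rules of thumb from the text (not Spark defaults).
MB_PER_TASK = 64             # upper end of the 40-64 MB per task range
THREADS_PER_CORE = 4
MAX_CORES_PER_EXECUTOR = 5
THREADS_PER_EXECUTOR = 16    # figure used in the text's memory estimate
OVERHEAD_MB = 500
BLOCK_MB = 128               # assumption: implied by 10 GB -> 80 blocks


def size_cluster(data_gb):
    """Return the block, task, core, executor and memory estimates."""
    data_mb = data_gb * 1024
    blocks = math.ceil(data_mb / BLOCK_MB)
    tasks = math.ceil(data_mb / MB_PER_TASK)
    cores = math.ceil(tasks / THREADS_PER_CORE)
    executors = math.ceil(cores / MAX_CORES_PER_EXECUTOR)
    # Working memory per executor plus overhead, rounded up to whole GB.
    working_mb = THREADS_PER_EXECUTOR * MB_PER_TASK
    memory_gb = math.ceil((working_mb + OVERHEAD_MB) / 1024)
    return {
        "blocks": blocks,
        "tasks": tasks,
        "cores": cores,
        "executors": executors,
        "executor_memory_gb": memory_gb,
    }


print(size_cluster(10))
```

For 10 GB this reproduces the chain in the text: 80 blocks, 160 tasks, 40 cores, 8 executors, and 2 GB per executor.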

Spark Dynamic Allocation:

Memory

Levels in Spark are

a)

Cache (default) – MEMORY_ONLY

b)

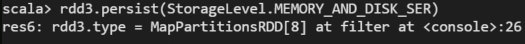

Persist

c)

MEMORY_ONLY_SERIALIZATION

d)

Memory and Disk

e)

MEMORY_AND_DISK_SERIALIZATION

f)

OFF_HEAP
